ar/vr product design

Creating a VR shooting game to investigate implicit bias

As a researcher at the Relations and Social Cognition lab, I decided to replicate the First-Person Shooter Task (FPST), a video game that simulates a police officer's experience on a computer screen, in Virtual Reality. The goal was to see whether a similar pattern of biased tendencies would appear in a more realistic and immersive environment.

Background

In the original game, one White or Black target appears at a time, at a randomized location and in a randomized order. Players must decide in less than one second whether to shoot or not-shoot, depending on whether the target is armed or unarmed. We adopted most of the existing task's paradigm, which measures the ratio of false decisions and the reaction time from the moment of target detection until the decision to shoot or not-shoot.

Stakeholders

Stakeholder 1: Researchers

Our research team's main concern was the validity of the data. Confounding variables, such as head movement after the detection point, had to be avoided.

Stakeholder 2: Institutional Review Boards (IRBs)

IRBs required our team to minimize the risk of potential trauma or injury by all means. The entire game had to be safe and as short as possible.

The Structure

The game starts with a three-minute tutorial that teaches the participant how to use the controller buttons by shooting randomly generated bull's-eye and board-style targets. Five sessions, including one practice session, follow the tutorial. During those sessions, 48 human targets appear at random within a 160-degree arc in front of the participant. After each session, a 20-second intermission gives participants a rest to minimize the risk of motion sickness.
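The random placement described above can be sketched as follows. This is only an illustration, not the project's Unity code: the function name `spawn_positions`, the 5-meter spawn distance, and the reading of "48 targets" as a per-session count are all assumptions.

```python
import math
import random

ARC_DEGREES = 160          # from the write-up: targets appear within a 160-degree arc
TARGETS_PER_SESSION = 48   # assumption: 48 is read here as a per-session count


def spawn_positions(rng, count=TARGETS_PER_SESSION, distance_m=5.0):
    """Return (angle_deg, x, z) tuples for targets in front of a seated player.

    The player sits at the origin facing +z. distance_m is a hypothetical
    spawn radius; the write-up does not state one.
    """
    half_arc = ARC_DEGREES / 2
    positions = []
    for _ in range(count):
        angle = rng.uniform(-half_arc, half_arc)  # 0 degrees is straight ahead
        rad = math.radians(angle)
        x = distance_m * math.sin(rad)  # left/right offset
        z = distance_m * math.cos(rad)  # forward offset, positive inside the arc
        positions.append((angle, x, z))
    return positions


# A seeded generator makes a session's layout reproducible for debugging.
session_layout = spawn_positions(random.Random(42))
```

Passing a seeded `random.Random` keeps a layout reproducible, which can help when comparing sessions across participants.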

3D Modeling in Blender

The 2D images of targets were replaced with 3D human avatars. In total, ten avatars and six different objects were created. The avatars include three different turn-and-reveal animations designed to play when the participant detects a target.

From Detection to Shoot or Not-Shoot

Spatial Sound

Each target spawns with a directional audio cue so that the player can locate it.

Detect (Aim)

Once the red reticle lands on a target's torso, the officer's gun comes up into view and the target activates.

Reveal

The target turns around, and the participant can now see the object the target is holding.

Shooting decision

The participant has less than one second to decide to shoot or not-shoot the target using a hand controller.
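The outcome of a single trial in the sequence above can be expressed as a small classification function. This is a minimal sketch under stated assumptions: the labels `"correct"`, `"false_decision"`, and `"time_over"`, the function name `classify_trial`, and the exact 1.0-second cutoff are illustrative choices (the write-up only says "less than one second").

```python
from typing import Optional

DECISION_WINDOW_S = 1.0  # assumption: the exact cutoff is not given in the write-up


def classify_trial(armed: bool, response: Optional[str],
                   elapsed_s: Optional[float]) -> str:
    """Classify one trial after the target has turned and revealed its object.

    response is "shoot", "not_shoot", or None when no button was pressed.
    elapsed_s is the time in seconds from detection to the button press.
    """
    if response is None or elapsed_s is None or elapsed_s >= DECISION_WINDOW_S:
        return "time_over"
    shot = response == "shoot"
    # Shooting an armed target or sparing an unarmed one counts as correct.
    return "correct" if shot == armed else "false_decision"


print(classify_trial(armed=True, response="shoot", elapsed_s=0.62))   # correct
print(classify_trial(armed=False, response="shoot", elapsed_s=0.48))  # false_decision
print(classify_trial(armed=True, response=None, elapsed_s=None))      # time_over
```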

Initial Usability Test (N=13)

The goals of the testing

Did all participants understand the aim-to-shoot principle?

Did any misleading instruction prompt unexpected behavior during the main sessions?

To what extent does the game cause motion sickness?

Time Over was the most common issue

We conducted game testing with 13 participants, tracking errors as false decisions or failures to respond in time ("Time Over"). The average error rate was 27.9%, with "Time Over" being the most common issue. Participants struggled to decide whether to shoot during the practice and first sessions. Post-test interviews revealed many didn’t understand how targets turned at the start. Observations showed some players moved their heads to aim, delaying their responses.
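To make the error-rate definition concrete, here is a hedged sketch of how one participant's results might be tallied. The outcome labels and the function name `error_summary` are hypothetical, and the sample data is made up for illustration; it is not the study's data.

```python
from collections import Counter


def error_summary(outcomes):
    """Return (error_rate, counts) for one participant's list of trial outcomes.

    An error is either a false decision or a failure to respond in time
    ("Time Over"), matching the definition used in the usability test.
    """
    counts = Counter(outcomes)
    errors = counts["false_decision"] + counts["time_over"]
    return errors / len(outcomes), counts


# Made-up example: 10 trials with 3 Time Over errors and 1 false decision.
sample = ["correct"] * 6 + ["time_over"] * 3 + ["false_decision"]
rate, counts = error_summary(sample)
print(rate)                 # 0.4
print(counts["time_over"])  # 3
```

Keeping the two error types as separate counts is what makes it possible to see which one dominates.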

The tutorial's shortcomings appear to be reflected in participants' game performance.

We needed a better tutorial that explains everything the participants need to know before starting the main sessions.

Reduction in error rate and the number of “Time Over” errors

The tutorial should convey the aim-to-shoot principle clearly to address the high error rate during the first few sessions. The instructions should also eliminate behavioral variance that could affect performance.

Enhanced immersion in the police officer’s role

The tutorial should integrate learning throughout the session to immerse the user in the role of a police officer. Rather than breaking the immersion, it should strengthen it and let participants engage with the game with excitement.

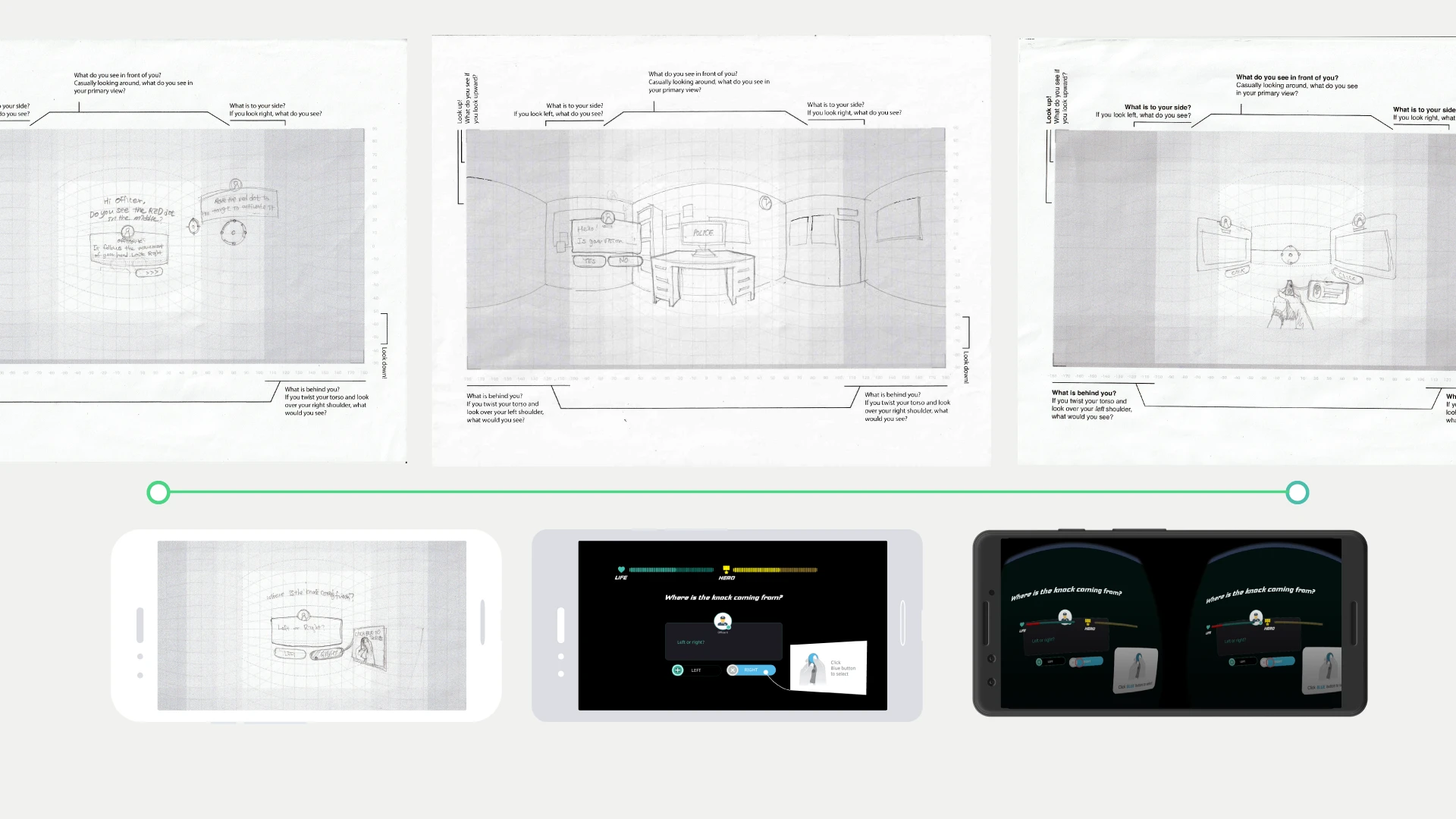

Design Explorations

First, we made a list of things that should be explained or checked before starting the main sessions.

Officer's Room

The player collects Life Points and Hero Points as they pass the “Head Tracking Navigation Check”, “Spatial Audio Check”, “Display Focus Check”, and “Finding Gun & Badge Mission”.

Shooting Range

The player earns Life Points and Hero Points as they master shooting a bad target and not-shooting a good target.

Prototype

First, we paper-prototyped the two spaces: the Police Officer's Room and the Shooting Range. We then built a high-fidelity prototype of those spaces in Unity.

Easy to learn in the dark

The tutorial begins in the dark to emphasize the presence and function of the red dot.

The red reticle

The reticle dot is designed to disappear when a target activates. This brief disappearance prevents the player from aiming back at the target after its activation.

We added an image of a head tracking motion to help users understand that moving their head is how they aim at a target.

Gradual spatial immersion

The tutorial gradually enhances spatial immersion as the player verifies that the game’s audio, display, and controller systems work correctly.

Spatial Audio

The player hears a knock on a door coming from the right. The game then asks the player which side the sound came from. If the answer is correct, the lights come on and the player can see the room, including the door where the knocking came from.

A Mission Before Proceeding

Participants must find their badge and handgun during the tutorial to gain Life Points and Hero Points. This scene helps players cognitively engage with and emotionally connect to the police officer character.

Engagement

Objects on the desk, chair, and cabinet drawers react interactively, moving and falling when the red reticle dot hovers over them. This subtly teaches players how the reticle interacts with the environment.

Firearm training

In the Shooting Range, players learn when to shoot or not by using the controller buttons. Targets appear randomly within a 160-degree field of view for practice.

Button control

After learning to use the controller to shoot and not-shoot, the participant encounters unidentified targets that spawn randomly within a 160-degree field of view. The participant can practice locating these targets by using the audio cue, activating them by aiming, and then deciding to shoot or not-shoot.

Here, the outcomes and learnings from the project are highlighted.

Decreased Time-over

During the practice session, the average number of failures to decide in time (Time Over) dropped from 13.15 to 5.81 per participant. The error rate during the practice session dropped from 45% to 30%.
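For scale, the reported figures can be turned into relative changes with simple arithmetic. The helper name `relative_drop` is illustrative; the input numbers are the ones reported above.

```python
def relative_drop(before, after):
    """Fractional reduction from before to after."""
    return (before - after) / before


time_over_drop = relative_drop(13.15, 5.81)   # average Time Over count per participant
error_rate_points = 45 - 30                   # absolute drop in percentage points
error_rate_drop = relative_drop(45, 30)       # relative drop in the error rate

print(f"Time Over: {time_over_drop:.1%} fewer")                    # Time Over: 55.8% fewer
print(f"Error rate: {error_rate_points} points, {error_rate_drop:.1%} relative")
# Error rate: 15 points, 33.3% relative
```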

Interaction design

One key takeaway from this project was the impact of interaction design in VR. Well-designed visual and auditory cues guide attention and enhance understanding more effectively than text explanations.

Immersion Without Trauma

The challenge was achieving realism without traumatizing participants. Given VR’s rarity in this field, I adopted seated gameplay and removed violent or fear-inducing elements, including gun sounds, blood, and dead bodies. I wonder if a more immersive design would yield different data.